We created composer and instrumentation tokens to give more control over the kinds of samples MuseNet generates. During training, these tokens were prepended to each sample, so the model would learn to use this information in making note predictions. Generations will be more natural if you pick instruments closest to the composer or band's usual style.
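As a rough illustration of what prepending conditioning tokens to a sample could look like, here is a minimal Python sketch. The token spellings (`<composer:...>`, `<instrument:...>`, `note_on:60`) and the helper name `build_training_sample` are hypothetical and chosen only for this example; the text does not describe MuseNet's actual token format.

```python
from typing import List


def build_training_sample(
    composer: str, instruments: List[str], note_tokens: List[str]
) -> List[str]:
    """Prepend one composer token and one token per instrument to a note sequence.

    The token format is an assumption made for illustration only.
    """
    composer_token = f"<composer:{composer.lower()}>"
    instrument_tokens = [f"<instrument:{name.lower()}>" for name in instruments]
    # Conditioning tokens come first, so every note prediction can attend to them.
    return [composer_token, *instrument_tokens, *note_tokens]


# Hypothetical note tokens standing in for an encoded MIDI excerpt.
notes = ["note_on:60", "wait:12", "note_off:60"]
sample = build_training_sample("Chopin", ["piano"], notes)
print(sample)
```

Because the conditioning tokens sit at the start of the sequence, the same layout can serve as a prompt at generation time: supply the composer and instrument tokens and let the model continue with notes.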

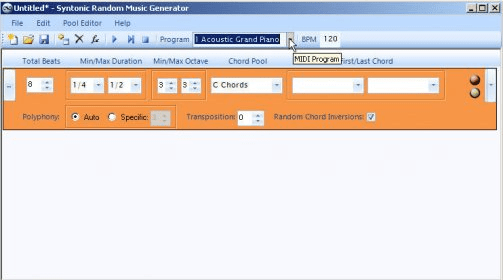

Random music generator algorithm

We've created MuseNet, a deep neural network that can generate 4-minute musical compositions with 10 different instruments, and can combine styles from country to Mozart to the Beatles. MuseNet was not explicitly programmed with our understanding of music, but instead discovered patterns of harmony, rhythm, and style by learning to predict the next token in hundreds of thousands of MIDI files. MuseNet uses the same general-purpose unsupervised technology as GPT-2, a large-scale transformer model trained to predict the next token in a sequence, whether audio or text.

Since MuseNet knows many different styles, we can blend generations in novel ways. Here the model is given the first 6 notes of a Chopin Nocturne, but is asked to generate a piece in a pop style with piano, drums, bass, and guitar. The model manages to blend the two styles convincingly, with the full band joining in at around the 30-second mark.
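The next-token objective mentioned above can be pictured as turning one token sequence into many (context, target) examples. The sketch below is only an illustration under assumed, hypothetical token names; it shows how the training pairs are formed, not how MuseNet or GPT-2 is actually implemented.

```python
from typing import List, Tuple


def next_token_pairs(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Return (context, target) pairs where each target is the token after its context."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


# Hypothetical tokens standing in for an encoded MIDI excerpt.
sequence = ["note_on:60", "wait:12", "note_off:60"]
for context, target in next_token_pairs(sequence):
    print(context, "->", target)
```

A sequence of n tokens yields n - 1 such pairs, which is why a large collection of MIDI files provides a great deal of training signal without any hand-written labels.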
